-


5 kubectl plugins to make your life easier

I have been using Kubernetes for five years, but only very recently started using plugins to enhance my kubectl commands. I will show you five plugins that help me avoid repetitive tasks, make cluster administration simpler, and incident response less stressful. All the plugins presented in this article are installable using Krew.

Photo by Iker Urteaga

Note for Mac users

If you’re using an ARM Mac, most of the plugins I mention will appear uninstallable when using Krew. It is generally because the plugin authors didn’t release a mac-arm64 build. But you can install the mac-amd64 builds, which work as well, by overriding the KREW_ARCH environment variable. For example:

    KREW_ARCH=amd64 kubectl krew install janitor

Tail

Logging pods through kubectl logs -f is always a good way to know what a running pod is doing. Sadly, I never manage to remember how to make it log multiple pods at once. The tail plugin solves that by giving us a set of helper functions to easily stream the logs of a group of pods. For example, it can retrieve logs from all the pods created by a Job, or all the pods attached to a Service:

    ❯ k tail --job=logging-job
    default/logging-job-xtx4s[busybox-container]: My log
    ❯ k tail --svc=mikochi
    default/mikochi-69d47757f6-9nds7[mikochi]: [GIN] 2023/07/27 - 12:31:16 | 200 | 496.098µs | 10.42.0.1 | GET "/api/refresh"
    default/mikochi-69d47757f6-9nds7[mikochi]: [GIN] 2023/07/27 - 12:31:16 | 200 | 10.347273ms | 10.42.0.1 | GET "/api/browse/"
    default/mikochi-69d47757f6-9nds7[mikochi]: [GIN] 2023/07/27 - 12:31:16 | 200 | 9.598031ms | 10.42.0.1 | GET "/api/browse/"
    default/mikochi-69d47757f6-9nds7[mikochi]: [GIN] 2023/07/27 - 12:31:19 | 200 | 193.686µs | 10.42.0.1 | GET "/ready"

Janitor

Janitor is a kubectl plugin that allows you to list resources in a problematic state. Instead of battling with grep, it gives you commands to automatically list unhealthy, unready, or unscheduled Pods, failed Jobs, pending PVCs, or unclaimed PVs. This is helpful when examining a cluster during an incident, as it can directly point you toward ongoing issues.

    ❯ k janitor pods status
    STATUS             COUNT
    Running            4
    Error              6
    ImagePullBackOff   1
    ❯ k janitor pods unhealthy
    NAME                 STATUS             AGE
    failing-job-ln7rf    Error              4m40s
    failing-job-vbfqd    Error              4m33s
    failing-job2-kmxqm   Error              4m30s
    failing-job-cjbt6    Error              4m27s
    failing-job2-grwcn   Error              4m23s
    failing-job2-s842x   Error              4m17s
    my-container         ImagePullBackOff   17m
    ❯ k janitor jobs failed
    NAME           REASON                 MESSAGE                                       AGE
    failing-job    BackoffLimitExceeded   Job has reached the specified backoff limit   4m46s
    failing-job2   BackoffLimitExceeded   Job has reached the specified backoff limit   4m36s

Neat

Neat is a simple utility to remove generated fields from the command output. You can use it by simply piping the output of kubectl get into kubectl neat. This makes for a more readable output and is very convenient if you want to save the yaml to create a new resource.

    ❯ k get pod -o yaml mikochi-69d47757f6-9nds7
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: "2023-07-21T12:30:58Z"
      generateName: mikochi-69d47757f6-
      labels:
        app.kubernetes.io/instance: mikochi
        app.kubernetes.io/name: mikochi
        pod-template-hash: 69d47757f6
      name: mikochi-69d47757f6-9nds7
      namespace: default
    .......
    ❯ k get pod -o yaml mikochi-69d47757f6-9nds7 | k neat
    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        app.kubernetes.io/instance: mikochi
        app.kubernetes.io/name: mikochi
        pod-template-hash: 69d47757f6
      name: mikochi-69d47757f6-9nds7
      namespace: default
    .......

View-secret

Since the data inside Secrets is base64 encoded, reading them often results in a mix of kubectl get, jq, and base64 -d. The view-secret plugin aims at simplifying that by allowing you to directly read and decode values from secrets.

    ❯ k view-secret mikochi username
    [CENSORED]
    ❯ k view-secret mikochi password
    [ALSO CENSORED]

Node-shell

If you want to directly access a node, finding the node IP, using SSH with the right RSA key, etc… can make you lose precious time during an incident. But it is possible to obtain a root shell from a (privileged) container using nsenter. The node-shell plugin leverages this to give you access to the nodes in a single kubectl command:

    ❯ k node-shell my-node
    spawning "nsenter-qco8qi" on "my-node"
    If you don't see a command prompt, try pressing enter.
    root@my-node:/# cat /etc/rancher/k3s/k3s.yaml
    apiVersion: v1
    clusters:
    - cluster:
    .......

-


Is technical analysis just stock market astrology?

Technical analysis is a part of finance that studies price moves to guide investment decisions. A lot of investors seem skeptical of the use of past price data, which leads to technical analysis often being perceived as similar to astrology. In this article, I will try to see if it can provide an edge to long-term investors and if it beats reading the horoscope.

Photo by Chris Liverani

Moving Average Crossover

There are hundreds of different technical analysis strategies and indicators out there. For this article, I decided to pick what seemed to be one of the simplest: SMA crossovers.

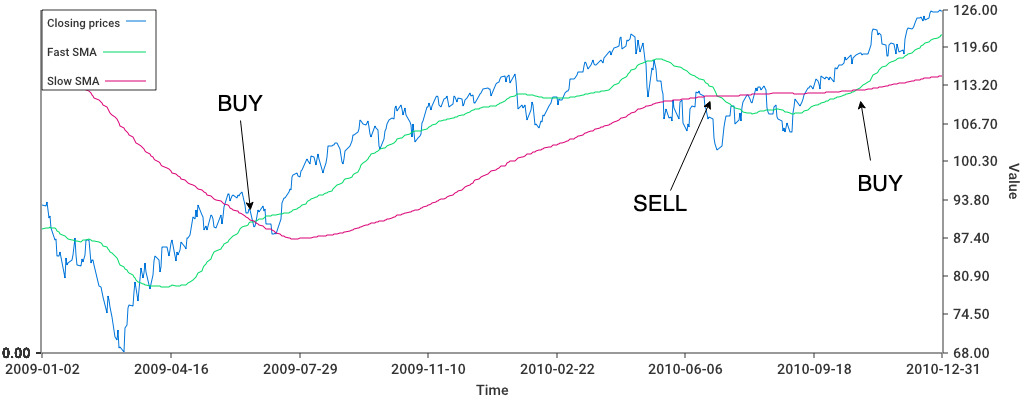

Simple Moving Averages (SMA) are, as the name suggests, just an average of past closing prices. The SMA Crossover strategy uses two moving averages, a “fast” one (50 days) and a “slow” one (200 days), and compares them to decide on buying or selling an asset. If the fast SMA is above the slow one, we should buy and hold the stock, and if this condition inverts, we should sell. It is a heuristic around momentum, which is the idea that if an asset price starts rising, we can jump on the bandwagon and hope it will continue its trajectory.
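To make the rule concrete, here is a minimal Python sketch of the signal. It is an illustration only, not the code used for the backtest in this article; the function names `sma` and `crossover_signal` are made up for the example, and days without enough history are treated as "stay out".

```python
def sma(prices, window):
    """Simple moving average of the last `window` closes (None until enough data)."""
    averages = []
    running_sum = 0.0
    for i, price in enumerate(prices):
        running_sum += price
        if i >= window:
            # Drop the close that just fell out of the window.
            running_sum -= prices[i - window]
        averages.append(running_sum / window if i >= window - 1 else None)
    return averages


def crossover_signal(prices, fast=50, slow=200):
    """True on days where the fast SMA is above the slow SMA (hold), else False."""
    fast_sma = sma(prices, fast)
    slow_sma = sma(prices, slow)
    return [
        f is not None and s is not None and f > s
        for f, s in zip(fast_sma, slow_sma)
    ]


# Tiny windows so the behaviour is visible on a handful of prices.
closes = [10, 11, 12, 13, 12, 11, 10, 9]
print(crossover_signal(closes, fast=2, slow=4))
# [False, False, False, True, True, False, False, False]
```

The signal turns on once the short average climbs above the long one and turns off again shortly after prices start falling, which is the momentum heuristic in its simplest form.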

I have backtested an SMA crossover strategy on the SPY ETF, one of the most traded passive investment funds in the world. My backtest makes the following assumptions:

- we do not pay any transaction fees

- we will obtain exactly the close price when buying or selling

- dividends received while holding stocks are immediately reinvested

- cash yields no interest
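Under these assumptions, a backtest loop can stay very small. The sketch below is one possible way to write it, not the actual script behind the numbers in this article: `backtest` is a hypothetical helper, the price series is assumed to be dividend-adjusted (which is one way to model reinvested dividends), and the signal computed at a day's close is assumed to be acted on at that same close.

```python
def backtest(prices, in_market, start_cash=10_000.0):
    """Return the final portfolio value.

    prices:    daily closes, assumed dividend-adjusted (dividends reinvested).
    in_market: in_market[i] is the signal known at the close of day i; it decides
               whether we hold the asset between the close of day i and day i + 1.
    """
    value = start_cash
    for i in range(len(prices) - 1):
        if in_market[i]:
            # Fully invested, no fees: value follows the close-to-close return.
            value *= prices[i + 1] / prices[i]
        # Otherwise we sit in cash, which yields nothing.
    return value


closes = [100.0, 110.0, 99.0, 108.9]
buy_and_hold = backtest(closes, [True] * len(closes))
timed = backtest(closes, [True, False, True, True])  # steps aside for the drop
print(round(buy_and_hold, 2), round(timed, 2))
```

Passing an all-True signal gives the buy and hold baseline, so the same function can be used to compare both strategies.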

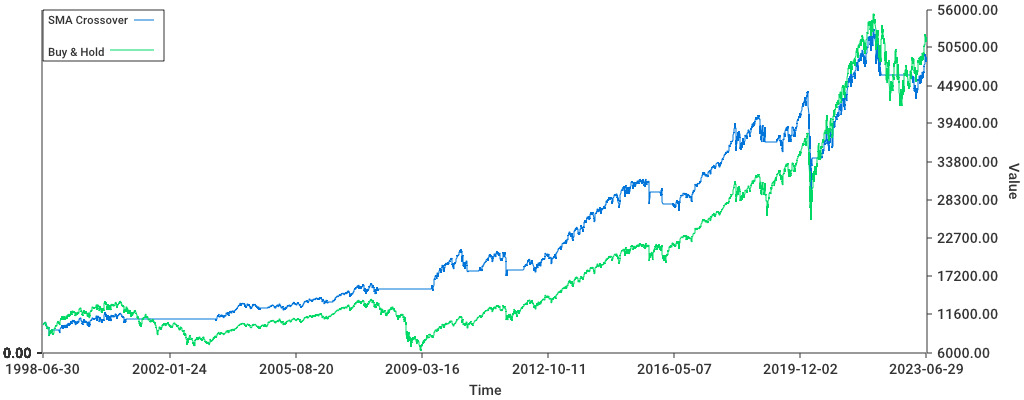

While someone who bought 10,000$ of SPY in July 1998 and held it continuously would have ended up with 52,030$ in June 2023 (6.78% annualized), an investor using the SMA crossover strategy would have ended up with 49,127$ (6.54%). Even without accounting for transaction costs, the SMA crossover strategy doesn’t outperform simply buying and holding over a long period.

Does this mean that this strategy is completely useless?

Actually no, a closer look at the data shows that the SMA crossover strategy allows investors to avoid extended drawdowns, like the 2008 crisis. This significantly reduces the risk taken by investors: the yearly standard deviation of the SMA crossover strategy is only 13%, against 19% for the buy and hold strategy. Since returns didn’t decrease as much as risk, this leads to higher risk-adjusted returns, as measured by the Sharpe ratio: 0.43 for passive investing vs 0.53 for SMA crossovers.
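For readers unfamiliar with the Sharpe ratio, it is the average excess return divided by the standard deviation of returns. The sketch below shows one common way to compute an annualized version from daily returns. The exact convention used for the figures above is not stated here, so the zero risk-free rate and the 252 trading days per year are assumptions, and `annualized_sharpe` is a name invented for the example.

```python
import math
import statistics


def annualized_sharpe(daily_returns, risk_free_rate=0.0, periods_per_year=252):
    """Annualized Sharpe ratio from a list of daily returns (0.01 means +1%).

    risk_free_rate is a yearly rate; 0.0 is an assumption, not a market fact.
    """
    daily_risk_free = risk_free_rate / periods_per_year
    excess = [r - daily_risk_free for r in daily_returns]
    mean_excess = statistics.fmean(excess)
    volatility = statistics.stdev(excess)  # sample standard deviation
    # Mean scales with time, volatility with its square root.
    return (mean_excess / volatility) * math.sqrt(periods_per_year)


print(round(annualized_sharpe([0.01, -0.01, 0.02, 0.0]), 2))
```

Two strategies with similar returns can have very different Sharpe ratios if one of them is much less volatile, which is what happens with the SMA crossover.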

But what about astrology?

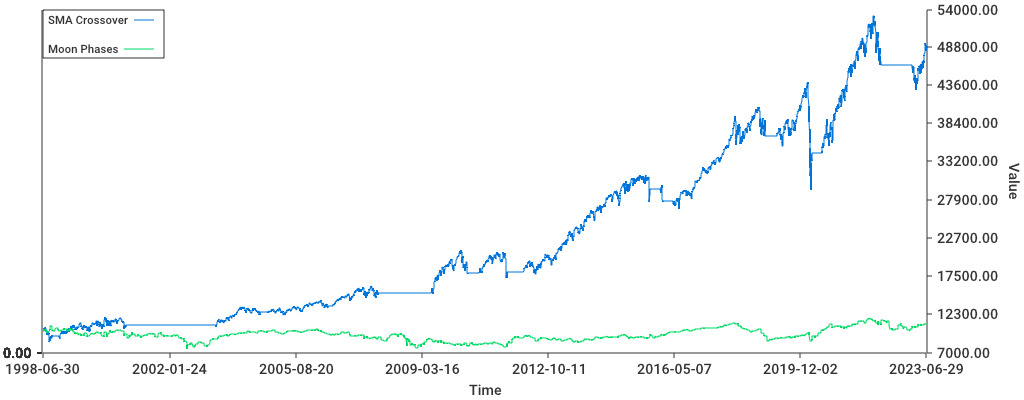

Of course, the subject of this article wasn’t to compare technical analysis to buy and hold, but to astrology. I was surprised to learn that financial astrology was actually a thing and that there were also a lot of astrology-related strategies out there. I decided to implement a strategy based on lunar cycles since it was one of the clearest about when to buy and when to sell.

The strategy goes as follows: we purchase SPY on a new moon, and re-sell it on the next full moon. And repeat that every lunar month.

Clearly, it fails at beating SMA crossovers, or at doing basically anything: an investor using the moon phase strategy starting with 10,000$ would end up with only 11,110$ and a Sharpe ratio of only 0.09.
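The new-moon-to-full-moon rule can be approximated without any astronomy library. The sketch below is an assumption-heavy illustration rather than the code used for this backtest: it relies on the mean length of a lunar month and on a commonly used reference new moon (6 January 2000, 18:14 UTC), so real phases can differ by several hours. `moon_phase_fraction` and `hold_spy` are hypothetical names.

```python
from datetime import datetime, timezone

SYNODIC_MONTH_DAYS = 29.530588853  # mean time between two new moons
REFERENCE_NEW_MOON = datetime(2000, 1, 6, 18, 14, tzinfo=timezone.utc)


def moon_phase_fraction(moment):
    """Position in the lunar cycle: 0.0 is a new moon, about 0.5 a full moon."""
    days_since_reference = (moment - REFERENCE_NEW_MOON).total_seconds() / 86_400
    return (days_since_reference / SYNODIC_MONTH_DAYS) % 1.0


def hold_spy(moment):
    """True between a new moon and the following full moon (the waxing half)."""
    return moon_phase_fraction(moment) < 0.5


print(hold_spy(datetime(2000, 1, 10, tzinfo=timezone.utc)))  # waxing moon
print(hold_spy(datetime(2000, 1, 25, tzinfo=timezone.utc)))  # waning moon
```

The resulting booleans can be used as a daily in-or-out signal, exactly like the one produced by a moving average crossover.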

Conclusion

Similarly to diversification, simple technical analysis strategies can be used to minimize investment risk, without necessarily reducing profits by the same amount. There’s however no guarantee that a strategy that worked in the past will continue working in the future. It is also likely that there exist smarter momentum indicators than moving averages, but you’ll have to do your own backtests for those.

-


Introducing Mikochi: a minimalist remote file browser

Like many people working in DevOps, I have the bad habit of playing with servers and containers in my free time. One of the things I have running is a media server, which I use to access my collection of movies and shows (that I obviously own and ripped myself). To make my life easier with this, I have built a web application that allows me to browse, manage, and stream/download files. It is called Mikochi and received its first stable release last week.

Problem statement

My media server was initially running Jellyfin. It is a pretty nice piece of software that probably fits the needs of many people. Sadly for me, it focuses a lot on areas I didn’t care about (metadata, transcoding, etc.) while being lackluster on classic file management.

What I need is basic FTP-like file management from a browser. This means it needs to list the content of folders and allow navigation between them, while also letting me download, rename, delete, and upload files.

In addition to that, I also wanted a search function that could lead me to any file/directory in the server.

Since it’s replacing a media server, the last requirement was streaming. I do not use streaming in the browser much (since it doesn’t always support fancy codecs like HEVC), so I just needed to be able to read it from a media player like VLC or MPV, which is easier.

Frontend

One of my aims in this project was to get back into frontend development since I hadn’t touched a line of JavaScript in a while. For this project, I decided to use Preact, a React alternative weighing only 3kb.

Preact was a great surprise. I expected a framework that small to be too good to be true, but it works well. I didn’t have any trouble learning it since its API is almost the same as React’s, and I didn’t encounter any performance issues or unexplainable crashes. I will definitely try to use it again for future projects.

The complete JS bundle size ends up being ~36kb, barely more than the icon pack that I use.

The character who gave this software its name

Backend

The backend was made using Go, which has been one of my main languages for the past 5 years. I used the Gin framework to handle the regular HTTP boilerplate, which worked admirably.

The only pain point I had was re-implementing JWT authentication. I decided not to use a library for that because I felt it might not handle one edge case well: I need tokens passed in GET params for streaming requests, since VLC isn’t going to write an Authorization header. It’s not particularly complex, but it is a lot of code.

I had the good surprise that streaming files “just works” in a single line of code:

    c.File(pathInDataDir)

Running it

If you’re interested in trying out Mikochi, it can be launched with just a Docker image:

    docker run \
      -p 8080:8080 -v $(pwd)/data:/data \
      -e DATA_DIR="/data" -e USERNAME=alicegg \
      -e PASSWORD=horsebatterysomething zer0tonin/mikochi:latest

Compiled binaries are also available on GitHub. And for those who love fighting with Ingresses and PersistentVolumeClaims, there’s a helm chart available.

-


Specialization considered harmful

It is sometimes recommended that software engineers should learn “depth-first”, and seek to specialize early in their careers. I think this advice is misguided. In my opinion, having a wide range of knowledge is in many cases more important than being extremely good at a very specialized task. I will use this article to make the case for avoiding specialization as a software engineer.

Photo by Kenny Eliason

It’s not just about practice hours

A common misconception when learning a new skill is that, since it might take 10,000 hours to master it, the best thing to do is to start practicing as early as possible and with as much focus as possible. Reality, however, is not that simple.

It may be true that just putting in a lot of focused practice hours will lead to amazing results in problems that are very constrained in scope (like chess). However, for subjects that have a very broad and frequently evolving set of problems, people with experience working on very diverse subjects will often perform better than those who specialized intensely.

One of the reasons behind that is that many problems that are at first sight unrelated will have similar patterns. Being exposed to a wide variety of problems allows you to see a lot of potential patterns between problems.

This is why history has many records of people achieving breakthroughs across several fields. For example, Benoit Mandelbrot first noticed the concept of fractals while studying probability distributions in financial markets. He then managed to apply the concept to many patterns that appear in nature, such as coastlines, clouds, and blood vessels.

Tech changes, fast

Many people underestimate how fast the world of software engineering can change. Some extremely popular concepts like “DevOps” were pretty much not a thing 10 years ago. 20 years ago, I doubt anyone would have known what differentiated a “frontend developer” from a “backend developer”. Even if you zoom in on very specific technologies, things are changing every year: React code written in 2023 doesn’t have much in common with React code written in 2015.

Photo by Lorenzo Herrera

Being a generalist allows you to adapt much faster to change; it can be seen as a way of “learning to learn”. Once you have been exposed to many problems and solutions, picking up new tools and adapting to changes in the field becomes easier and easier.

There’s more to software engineering than code

Most importantly, learning the ins and outs of a programming language and tech stack is not what brings value. Software engineering is the art of using computers to solve problems. Those problems are generally not about computers, but involve businesses and people. In my experience, this is a point that is easy to miss when over-focusing on the depth of a specific technology.

This is also where a lot of people who make a career change and transition late into the tech industry have an edge. They can compensate for their late start by being more aware of the reality of business and the needs of organizations.

-


Why diversification matters for long-term investors? Meet Shannon's Demon

Any introduction to finance will mention that diversification is extremely important. Intuitively, it is easy to understand that diversification reduces risk: if I own stocks in two companies and one of them goes bankrupt, I lose less than if I had invested all my money in that one. However, what appears less intuitive is that diversification itself can increase investment portfolio returns. This phenomenon is known as Shannon’s Demon, named after its inventor Claude Shannon, also famous for his work on cryptography.

Photo by micheile dot com

Let’s take the following scenario: I am an investor who can purchase two assets with completely random and unpredictable returns. My crystal ball is broken, so I cannot know in advance which of the two assets will perform better. They can be modeled by a random walk. Any change to my investment portfolio will cost a 1% transaction fee.

I can use the following two strategies:

- put 100% of my money in one of the two assets and hope it performs well

- put 50% of my money in each asset and rebalance the portfolio every 6 months to keep each position at 50%

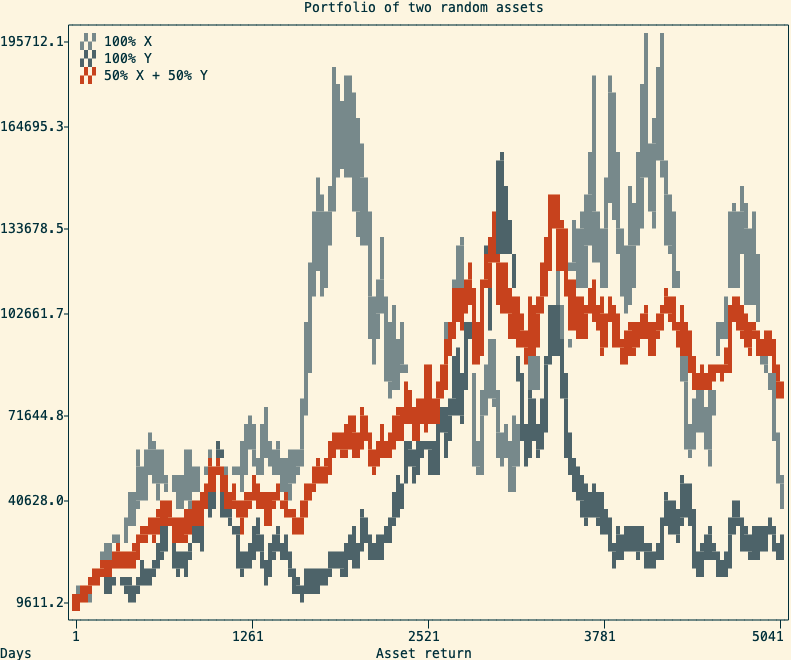

A cherry-picked example of the return of two random assets and a balanced portfolio (in red)

I made a Monte-Carlo experiment that simulates the above scenario 100,000 times over 20 years (5040 trading days). After inspecting the final returns, I observed the following:

- in nearly 100% of outcomes, the balanced portfolio beats investing everything in the worst-performing asset

- in 70% of outcomes, the balanced portfolio also beats investing everything in the best-performing asset
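An experiment of this kind can be sketched in a few lines of Python. The version below is a simplified illustration, not the simulation behind the figures above: the random walk model (independent, zero-mean daily log returns), the 2% daily volatility, the way the 1% fee is charged on both legs of each rebalance, and the names `simulate_once` and `run_experiment` are all assumptions, so the percentages it prints will not match exactly.

```python
import math
import random


def simulate_once(rng, days=5040, rebalance_every=126, fee=0.01, daily_vol=0.02):
    """Simulate two random-walk assets and a 50/50 rebalanced portfolio.

    Every strategy starts with a value of 1.0. Returns the final values of
    (balanced portfolio, all-in on X, all-in on Y).
    """
    price_x = price_y = 1.0
    units_x = units_y = 0.5  # balanced portfolio: half of the money in each asset
    for day in range(1, days + 1):
        price_x *= math.exp(rng.gauss(0.0, daily_vol))
        price_y *= math.exp(rng.gauss(0.0, daily_vol))
        if day % rebalance_every == 0:
            value_x = units_x * price_x
            total = value_x + units_y * price_y
            # We sell `traded` of the winner and buy `traded` of the loser.
            traded = abs(value_x - total / 2)
            total -= fee * 2 * traded  # assumed: fee charged on both legs
            units_x = (total / 2) / price_x
            units_y = (total / 2) / price_y
    balanced = units_x * price_x + units_y * price_y
    return balanced, price_x, price_y


def run_experiment(n_sims=200, seed=42, days=5040, rebalance_every=126,
                   fee=0.01, daily_vol=0.02):
    """Share of runs where the balanced portfolio beats the worst / best asset."""
    rng = random.Random(seed)
    beats_worst = beats_best = 0
    for _ in range(n_sims):
        balanced, x, y = simulate_once(rng, days, rebalance_every, fee, daily_vol)
        beats_worst += balanced > min(x, y)
        beats_best += balanced > max(x, y)
    return beats_worst / n_sims, beats_best / n_sims


share_beating_worst, share_beating_best = run_experiment()
print(f"beats worst asset: {share_beating_worst:.0%}")
print(f"beats best asset:  {share_beating_best:.0%}")
```

Increasing `n_sims` makes the estimates more stable at the cost of run time, and changing `daily_vol` or `rebalance_every` is an easy way to see how sensitive the effect is to the chosen parameters.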

Explanation

The second observation might be surprising, but can be easily explained. The trick is that regular rebalancing will create a mechanical way to “buy low and sell high”.

Let’s illustrate it step by step:

- at the start, I own 500 of asset X, for a value of 10$ each, and 500 of Y for the same value

| Name | Price | Position | Value |
|------|-------|----------|-------|
| X | 10$ | 500 | 5,000 |
| Y | 10$ | 500 | 5,000 |

- after 6 months, my position in X experienced explosive growth and now trades at 50$, while Y is stable at 10$

| Name | Price | Position | Value |
|------|-------|----------|-------|
| X | 50$ | 500 | 25,000 |
| Y | 10$ | 500 | 5,000 |

- I rebalance the portfolio, and now own 15,000$ in X and 15,000$ in Y

| Name | Price | Position | Value |
|------|-------|----------|-------|
| X | 50$ | 300 | 15,000 |
| Y | 10$ | 1,500 | 15,000 |

- 6 months later, X performed poorly and is back at 10$, while Y is now worth 20$

| Name | Price | Position | Value |
|------|-------|----------|-------|
| X | 10$ | 300 | 3,000 |
| Y | 20$ | 1,500 | 30,000 |

- I rebalance, and now own 16,500$ in X and 16,500$ in Y

| Name | Price | Position | Value |
|------|-------|----------|-------|
| X | 10$ | 1,650 | 16,500 |
| Y | 20$ | 825 | 16,500 |

Over this scenario, my portfolio gained 23,000$. If I had invested everything into Y, I would have gained only 10,000$. And if I had bought and held X over the same time, I would not have made any profit.
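The arithmetic of this walkthrough can be replayed with a short script. It is only a sketch: `portfolio_value` and `rebalance` are names made up for the example, and, like the walkthrough itself, it ignores the 1% transaction fee.

```python
def portfolio_value(prices, units):
    """Total value of the positions at the given prices."""
    return sum(prices[name] * units[name] for name in units)


def rebalance(prices, units):
    """Return new positions so that every asset holds an equal share of the value."""
    target = portfolio_value(prices, units) / len(units)
    return {name: target / prices[name] for name in units}


units = {"X": 500, "Y": 500}
start_value = portfolio_value({"X": 10, "Y": 10}, units)  # 10,000$

# After 6 months: X trades at 50$, Y is stable at 10$.
prices = {"X": 50, "Y": 10}
units = rebalance(prices, units)  # 300 X and 1,500 Y

# 6 months later: X is back at 10$, Y is now worth 20$.
prices = {"X": 10, "Y": 20}
end_value = portfolio_value(prices, units)
units = rebalance(prices, units)  # 1,650 X and 825 Y

print(end_value - start_value)  # gain of the rebalanced portfolio
print(units)
```

Note that the gain comes entirely from the first rebalance: it moved money out of X right before X fell and into Y right before Y doubled.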

Caveat 1: correlated assets

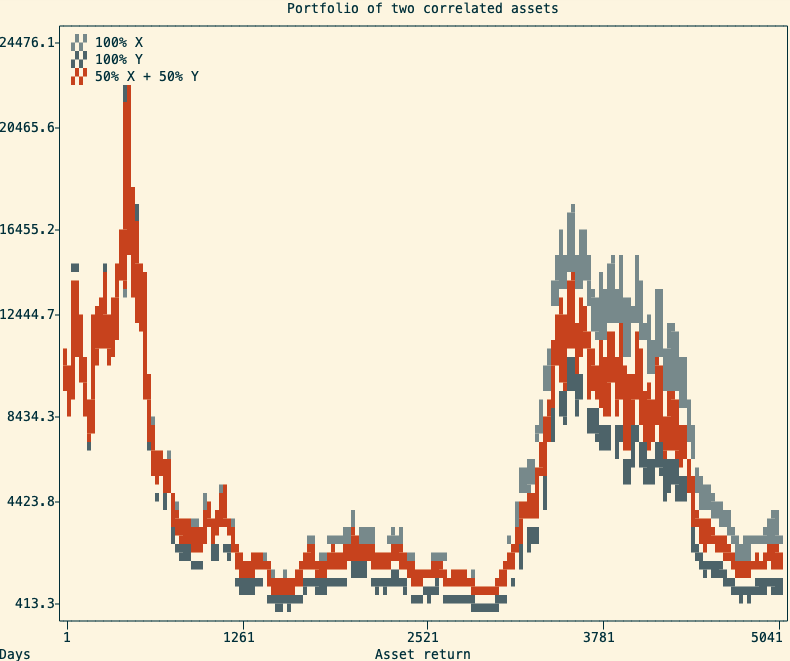

One important thing to consider is that this only works with assets that are not positively correlated. A positive correlation means that both assets will move up at the same time, and down at the same time. In this situation, the balanced portfolio will still perform better than the worst of the two assets, but will most of the time underperform the best-performing asset.

The return of two positively correlated assets and a balanced portfolio (in red) containing both

In practice, many asset returns are highly correlated with each other. For example, in the stock market, we can observe periods where the large majority of stocks tend to move up (bull markets) or down (bear markets). This makes the creation of a diversified stock portfolio more complicated than just randomly picking multiple stocks to purchase. This is also why it is often advised to diversify across multiple asset classes (e.g. stocks, bonds, commodities, and cash).

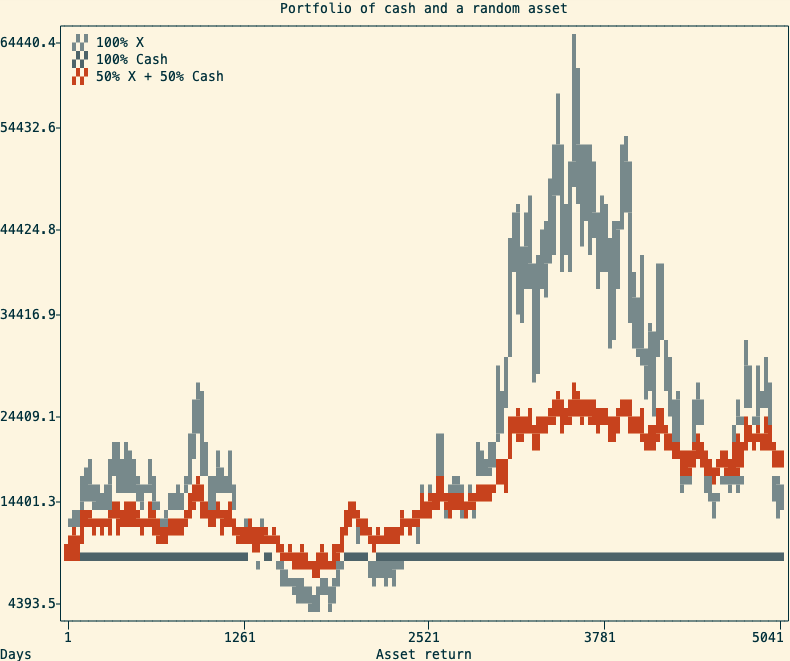

A common way to make use of Shannon’s Demon is to use an asset with a stable price (e.g. a money market fund) alongside a volatile one. A portfolio with 50% cash and 50% in a volatile asset will not only cut the risk in half but can also outperform being 100% in a single volatile asset. In a simulation of 10,000 random walks over 20 years, the balanced portfolio beat investing everything in the random walk in 91% of cases.

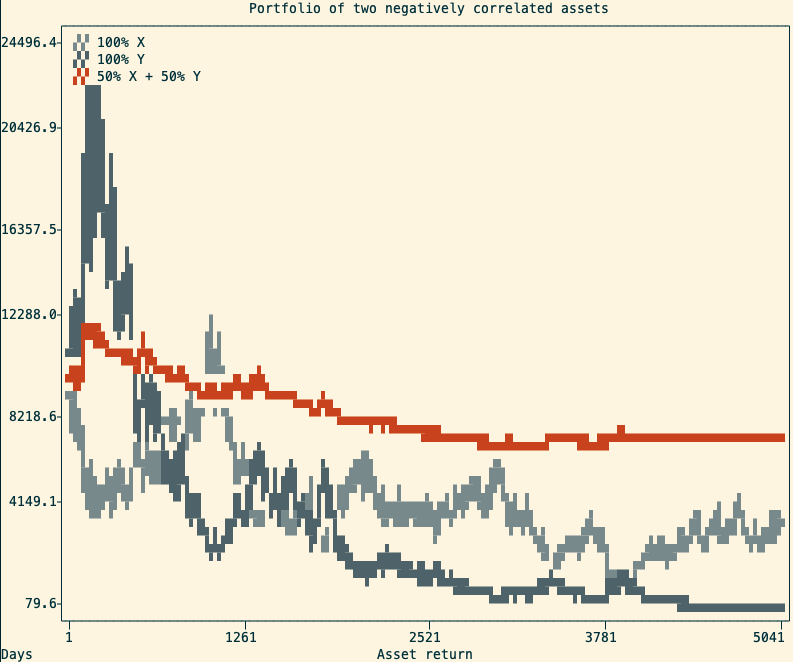

A simulation of a stable and a volatile asset and the resulting balanced portfolio

An alternative use of Shannon’s Demon is to profit from negatively correlated positions. This can be done by taking two positively correlated assets and being long on one, and short on the other. This strategy is called pair trading, and it is not something you should try at home.

An example of two negatively correlated assets and the resulting portfolio

Caveat 2: real life is not as simple

Obviously, there’s more to portfolio management than just diversification and rebalancing. First, the 50/50 allocation I used in this article is rarely optimal. The common calculation used for optimal position sizing is the Kelly criterion, which would need an entire article of its own to be covered properly.

In previous examples, I used a fixed 6 months period between rebalances. I chose this period for completely arbitrary reasons. An optimized rebalancing strategy would need to take into account asset variances, investment time-frames, and transaction costs to determine the rebalancing intervals.

Lastly, while simulations using random walks are a useful mathematical tool, they might not reflect real market conditions. A portfolio composed of assets that are likely to depreciate over time is unlikely to be profitable, no matter how diversified it is. This is why asset purchases should be carefully researched, possibly with the help of a professional financial advisor (not me).
